Looking under the hood to understand the basic concepts behind ChatGPT

There has been so much excitement, anticipation, and anxiety about ChatGPT recently, but not much that explains how it works. There are plenty of technical papers, of course, but for the average person they can be a bit daunting.

This article aims to present a simple view of how ChatGPT and related AI technologies work without getting too much into the technical details. Inevitably there will be jargon, but I will try to explain the jargon in a way that is easier to understand. This seems like a farfetched goal (how to tell without telling), but I will give it a good try.

ChatGPT algorithm

Let’s start by explaining what ChatGPT is and how the ChatGPT algorithm works.

ChatGPT

ChatGPT is a chatbot, which is a computer program that can simulate conversations with human users. Chatbots use natural language processing (NLP) to understand the user’s input and generate a response relevant to the user’s question or request. NLP is a field of AI focused on the interaction between computers and human language.

ChatGPT uses GPT (Generative Pre-trained Transformer), a large language model created by OpenAI. Besides ChatGPT, there are other similar chatbots, including Bard by Google and Claude by Anthropic. Other well-known chatbots include Siri by Apple, Alexa by Amazon, and Google Assistant by Google.

Large language models

Large language models (LLMs) are AI models that learn and generate human language. LLMs are a core part of NLP. Some of the LLMs you might have heard about are GPT by OpenAI, LaMDA (Language Model for Dialogue Applications) by Google, and LLaMA (Large Language Model Meta AI) by Meta. These models learn from a large amount of text data and then use what they’ve learned to generate or understand new text.

The basic idea behind LLMs is that they predict the next word in a sequence using the words that came before it.

For example, if you have this sequence of words:

A quick brown fox jumps over the lazy

The LLM will predict the next word:

A quick brown fox jumps over the lazy dog

It does this using an AI technique called machine learning.

Machine Learning

Machine learning is a family of AI algorithms that involves ingesting large amounts of data which is then used to train an AI model to make decisions.

Let’s say you want to teach a child what a dog looks like. You might show them many pictures of different dogs. After seeing enough pictures, the child starts to understand what characteristics all dogs tend to have: they have four legs and a tail, they can be different sizes, but their faces have similar structures, and so on. When the child sees a dog they’ve never seen before, they can tell it’s a dog because of the patterns they have learned.

Machine learning works in a similar way. Let’s say we want to predict the next word after a sequence of words. We start by giving the computer a large amount of text data. This could be books, articles, websites, or anything with words. This is known as training data. During its training, the model learns the patterns of a language. It learns that certain words often go together (like “brown” and “fox”) and that there are rules in language we usually follow (like putting adjectives before the nouns they describe). Eventually, these patterns become a model: a set of learned rules that the computer can use to predict the next word.
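To make the idea of "learning which words go together" concrete, here is a minimal sketch in Python. It simply counts which word follows which in a tiny, made-up training text and predicts the most frequent follower. This is my own toy illustration, not how GPT works: real LLMs use neural networks rather than simple counts, and the function names and training text here are invented for the example.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next_word(model, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Made-up training data, just enough to show the pattern-counting idea.
training_text = (
    "the quick brown fox jumps over the lazy dog "
    "the lazy dog sleeps all day "
    "the lazy dog ignores the quick brown fox"
)
model = train_bigram_model(training_text)
print(predict_next_word(model, "lazy"))
print(predict_next_word(model, "brown"))
```

Because "dog" is the only word that ever follows "lazy" in the training text, that is what the model predicts. A word it has never seen gives it nothing to go on, which hints at why the amount of training data matters so much.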

The basic building block of machine learning algorithms used in LLMs is the neural network.

Neural Network

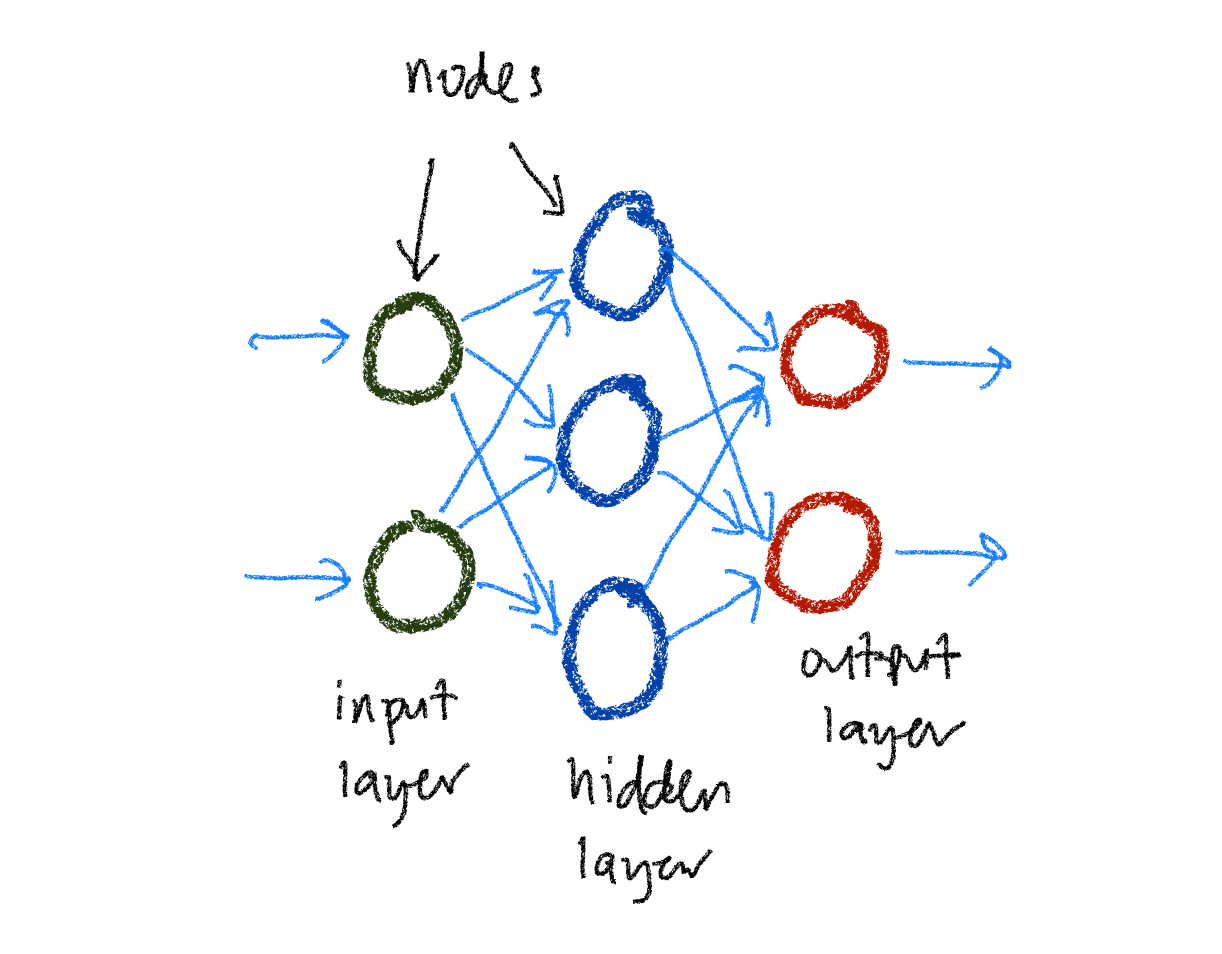

A neural network is a machine learning algorithm that works like how we think the human brain works. It’s made up of lots of little parts called nodes or neurons, which are grouped into layers that work together to learn from data.

The learning stage of a neural network occurs during a process called training. Let’s take the earlier example that we want to predict the next word in a sentence.

First, before we start training, we need to split the training data into sequences of certain lengths, say 10 words, to predict the next word after the sequence.
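Here is a rough sketch of that splitting step, assuming we slide a fixed-size window over the text one word at a time. I use a window of 3 words instead of 10 to keep the output short, and the function name is made up for this example.

```python
def make_training_examples(words, sequence_length):
    """Slide a fixed-size window over the words.

    Each example pairs `sequence_length` input words with the single
    word that follows them.
    """
    examples = []
    for start in range(len(words) - sequence_length):
        context = words[start:start + sequence_length]
        target = words[start + sequence_length]
        examples.append((context, target))
    return examples

words = "a quick brown fox jumps over the lazy dog".split()
examples = make_training_examples(words, sequence_length=3)
for context, target in examples:
    print(context, "->", target)
```

Each printed line is one training example: the words on the left are what the model sees, and the word on the right is what it should learn to predict.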

Next, we convert the words in the sequences into vectors, essentially a list of numbers. This process is called embedding, and the vectors are sometimes called embeddings or word vectors. Words that are similar to each other have vectors that are similar to each other.
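To show what "similar vectors" might mean, here is a small sketch that compares word vectors using cosine similarity, one common way to measure how closely two vectors point in the same direction. The three-number vectors below are hand-written for illustration only. Real embeddings are learned during training and have hundreds or thousands of numbers.

```python
import math

def cosine_similarity(a, b):
    """Return a value near 1.0 when two vectors point in a similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-written embeddings (not taken from any real model).
embeddings = {
    "dog": [0.9, 0.8, 0.1],
    "puppy": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.9],
}

dog_vs_puppy = cosine_similarity(embeddings["dog"], embeddings["puppy"])
dog_vs_car = cosine_similarity(embeddings["dog"], embeddings["car"])
print(f"dog vs puppy: {dog_vs_puppy:.2f}")
print(f"dog vs car:   {dog_vs_car:.2f}")
```

With these made-up numbers, "dog" and "puppy" score much higher than "dog" and "car", which is the behavior we want from embeddings of related words.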

To train the neural network, we feed the sequences of embeddings into it one at a time. After each word, the neural network updates its internal state, which is held in its layers of nodes, according to what it has learned. When all the embeddings in a sequence have been fed into the neural network, we ask it to make a prediction.

The predicted embedding is compared to the actual embedding for the next word in the sequence. The difference between these embeddings is the prediction error, which is used to adjust the internal state of the neural network through a process called back-propagation. The adjustments are made in a way that would make the predicted embedding closer to the actual embedding.
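The sketch below shows the "predict, measure the error, adjust" loop on the smallest model I could think of: a single layer of weights that maps one context vector to a predicted embedding. With only one layer, back-propagation reduces to a plain gradient step, so treat this as a simplified stand-in for what happens across many layers in a real network. All numbers and names are invented for the example.

```python
def predict(weights, context):
    """Multiply the weight matrix by the context vector."""
    return [sum(w * x for w, x in zip(row, context)) for row in weights]

def squared_error(predicted, actual):
    """Prediction error: sum of squared differences between the two embeddings."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual))

def training_step(weights, context, actual, learning_rate):
    """Nudge every weight in the direction that shrinks the error."""
    predicted = predict(weights, context)
    new_weights = []
    for row, p, a in zip(weights, predicted, actual):
        # Gradient of (p - a)^2 with respect to weight w_j is 2 * (p - a) * x_j.
        new_row = [w - learning_rate * 2 * (p - a) * x for w, x in zip(row, context)]
        new_weights.append(new_row)
    return new_weights

context = [0.5, -0.2, 0.1]       # hypothetical summary of the input sequence
actual_next = [0.9, 0.1]         # hypothetical embedding of the true next word
weights = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

errors = []
for step in range(20):
    errors.append(squared_error(predict(weights, context), actual_next))
    weights = training_step(weights, context, actual_next, learning_rate=0.5)

print(f"error before training: {errors[0]:.4f}")
print(f"error after 20 steps:  {errors[-1]:.8f}")
```

The error shrinks on every step, which is the whole point: each adjustment moves the predicted embedding a little closer to the actual one.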

This process repeats, and the neural network is trained with all the training data until it gets really good at figuring out the next word in a sentence. After the training is done, the model can be asked to predict. The process of asking a model to make predictions is called inference.

Early neural networks had a small number of nodes and layers. However, as more data became available for training and more sophisticated ways of organizing the nodes were invented, the networks became huge, with nodes numbering in the millions and billions. Another term for algorithms that use such large neural networks is deep learning. The word deep in deep learning refers to the large number of hidden layers, the layers that sit between the input and the output.

Earlier neural network algorithms like recurrent neural networks (RNN) and long short-term memory (LSTM) networks were often used in NLP, but they have instability issues with very long text sequences.

In 2017, Google released a paper introducing the transformer, a type of neural network that improved NLP tremendously, and suddenly everything changed.

Transformers

Say you’re chatting with a group of friends about planning a movie night. When one of your friends says something, you don’t understand their words based only on what they just said. You consider the whole conversation: what movie you’ve been talking about, who’s available when, what snacks you plan to get, and so on. Transformers do something similar, especially when dealing with language.

A transformer is a type of neural network algorithm that’s especially good at handling context in data. It’s not limited to considering the piece of data it’s currently processing (like a word in a sentence) and the one that immediately preceded it. Instead, it can consider all the data points (all the words in the sentence), figure out which ones are most relevant to the current data point (word), and use those to help it better understand the current data point.

Let’s use a sentence as an example:

Even though I already ate dinner, I’m still hungry.

When a transformer tries to understand the word hungry, it doesn’t just look at the immediately preceding words like I’m still. It also considers ate dinner from earlier in the sentence because it’s relevant to understanding why someone might be hungry.

This ability comes from the attention mechanism that transformers use, which allows them to pay attention to different parts of the input data based on their relevance. This makes transformers great for tasks like machine translation and text generation, where understanding the full context of the input data is crucial.
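Here is a bare-bones sketch of one common form of attention, called scaled dot-product attention, applied to the example sentence. The word vectors are hand-picked so that "hungry" lines up with "ate" and "dinner". In a real transformer the vectors are learned, and separate learned projections produce the queries, keys, and values. Here I reuse the same made-up vectors for keys and values to keep things short.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output

# Hypothetical vectors for some of the words that come before "hungry".
context_words = ["ate", "dinner", "I'm", "still"]
context_vectors = [
    [1.0, 0.9, 0.0],
    [0.9, 1.0, 0.1],
    [0.1, 0.0, 0.8],
    [0.0, 0.1, 1.0],
]
hungry_vector = [1.0, 1.0, 0.2]

weights, output = attend(hungry_vector, context_vectors, context_vectors)
for word, weight in zip(context_words, weights):
    print(f"{word}: {weight:.2f}")
```

With these numbers, "ate" and "dinner" receive more weight than "I'm" and "still", even though they are further away in the sentence. Relevance, not distance, decides what the model pays attention to.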

All LLMs created recently, including GPT, are based on transformers.

Tokens

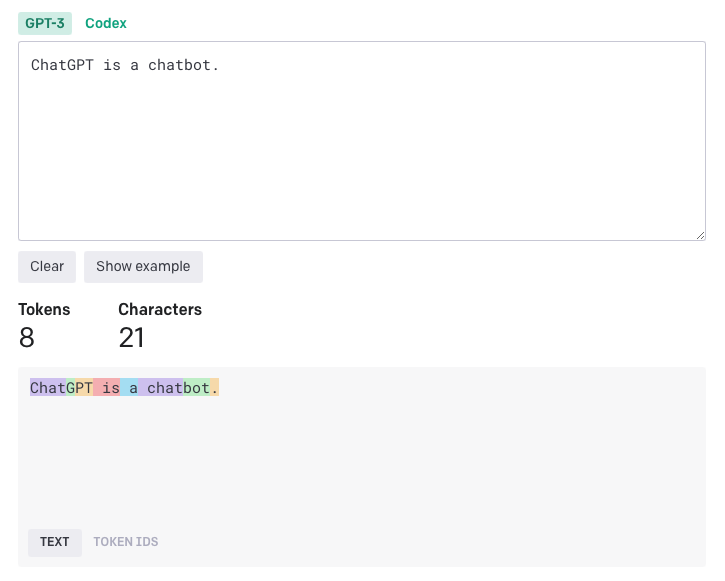

We have been talking about words in a sentence for training or inference, but actually, LLMs don’t operate on words. Instead, they use tokens.

A token is a chunk of text. Common and short words typically correspond to a single token. Long and less commonly used words are generally broken up into several tokens. You can go to OpenAI’s Tokenizer, enter your text, and see how it gets split up into tokens.

You may be wondering why the words are tokenized this way.

Let’s say we use each character as a token. That makes breaking up the text into tokens easy and keeps the total number of different tokens small. However, we can’t encode nearly as much information in each token. In the example above, 8 character tokens can only encode ChatGPT, while 8 OpenAI tokens can encode the whole sentence. Current LLMs have a limit to the maximum number of tokens that they can receive, so we want to pack as much information as possible into each token.

What if each word is a token? Compared to OpenAI’s approach, we would only need 5 tokens to represent the same sentence, which is more efficient. However, LLMs need to have a complete list of tokens they might encounter, and this method cannot deal with made-up words (very common in fiction) or domain-specific words (very common in technical documents).
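The trade-off between the three approaches can be sketched in a few lines of Python. The subword tokenizer below uses a simple greedy longest-match rule with a tiny hand-written vocabulary. That is an assumption I made to keep the example short, and it is not how OpenAI's tokenizer actually builds or applies its vocabulary. The sample sentence and vocabulary are also made up.

```python
def char_tokens(text):
    """One token per character."""
    return list(text)

def word_tokens(text):
    """One token per whitespace-separated word."""
    return text.split()

def subword_tokens(text, vocabulary):
    """Greedy longest-match: at each position take the longest known chunk,
    falling back to a single character when nothing in the vocabulary fits."""
    tokens = []
    position = 0
    while position < len(text):
        for end in range(len(text), position, -1):
            chunk = text[position:end]
            if chunk in vocabulary or end == position + 1:
                tokens.append(chunk)
                position = end
                break
    return tokens

# Hypothetical vocabulary, hand-written for this example.
vocabulary = {"Chat", "G", "PT", " is", " un", "believ", "ably", " cool"}
text = "ChatGPT is unbelievably cool"

print(len(char_tokens(text)), "character tokens")
print(len(word_tokens(text)), "word tokens")
print(subword_tokens(text, vocabulary))
print(subword_tokens("zq", vocabulary))  # unknown text still gets tokenized
```

The subword approach sits in between: it needs far fewer tokens than characters, and unlike whole words it can still handle text it has never seen by falling back to smaller pieces.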

Training ChatGPT

Now that we understand the ChatGPT algorithm, let’s look at how it was trained. Let’s start with some basics of supervised and unsupervised learning.

Supervised learning is a type of machine learning where we teach the model by providing input data and the correct output. Let’s say you want to train a model to classify news articles into different categories, such as business, sports, or entertainment. You would start by collecting a dataset of labeled news articles. For each article, you would manually label it with the appropriate category. Once you have the dataset, you can use it to train the model.

Unsupervised learning, on the other hand, deals with unlabeled data. The model is provided with inputs, but there are no explicit correct outputs. The model must find structure in the inputs on its own. An example is clustering, where the model groups similar data together.

Prior to GPT, most NLP models were trained using supervised learning for specific purposes, such as text classification or sentiment analysis. The problem is that it is difficult to find large amounts of labeled data. Also, these models become very specialized and can only be used for the purpose they were trained for.

GPT, however, is first pre-trained using unsupervised learning on unlabeled data, then fine-tuned using supervised learning for specific tasks.

Fine-tuning

In machine learning, there’s a concept known as transfer learning. The idea is that you can take a model that’s been trained on one task and use it as a starting point for a related task. This is very useful because training these models from scratch can require a lot of data and computational resources.

Fine-tuning is a specific type of transfer learning. In the context of GPT, fine-tuning involves taking the model that’s already been trained on a lot of text data (the pre-training phase) and then training it further on a more specific task.

For example, let’s say you have a GPT model pre-trained on a large amount of internet text. Now, you want to create a chatbot that advises on healthy eating. There’s a lot of information that GPT has learned about language from its pre-training, but it might not be very good at the specific task of giving diet advice.

So, you gather a dataset of conversations where people give good advice on healthy eating. You then take your pre-trained GPT model and fine-tune it on this new dataset. The model has now learned from your specific diet advice conversations, adjusting its parameters slightly to get better at this task.

In essence, fine-tuning allows us to customize a general-purpose model for specific tasks, making it more useful and efficient for different purposes. The advantage is that we don’t have to train a complex model like GPT from scratch, which can save significant time, data, and computational resources.

Also, GPT models that were only pre-trained and not fine-tuned turned out to be quite powerful on their own. Models that are pre-trained but not fine-tuned are called foundation models.

Different types of models

Models can be trained (or foundation models can be fine-tuned) for different tasks. For example, if you’re using a completion model, you might give it a prompt like this:

Once upon a time, in a kingdom far away

The model takes that prompt and generates the rest of the text like this:

Once upon a time, in a kingdom far away, there was a brave knight and a fierce dragon.

At a minimum, most language models are completion models.

A conversational model (like ChatGPT) is trained using conversational data, such as dialogue from books, scripts, or transcriptions of spoken conversations. This helps the model understand the back-and-forth nature of conversations, including how responses relate to previous messages.

An instruction model (like InstructGPT) is trained to understand and respond to human instructions. This might involve training data that includes commands followed by actions or prompts followed by appropriate responses.

A question-and-answer (Q&A) model is trained on data that includes questions paired with their answers, such as data from Q&A websites, textbooks, or other educational resources. This helps the model learn to provide informative and accurate answers to direct questions.

ChatGPT training

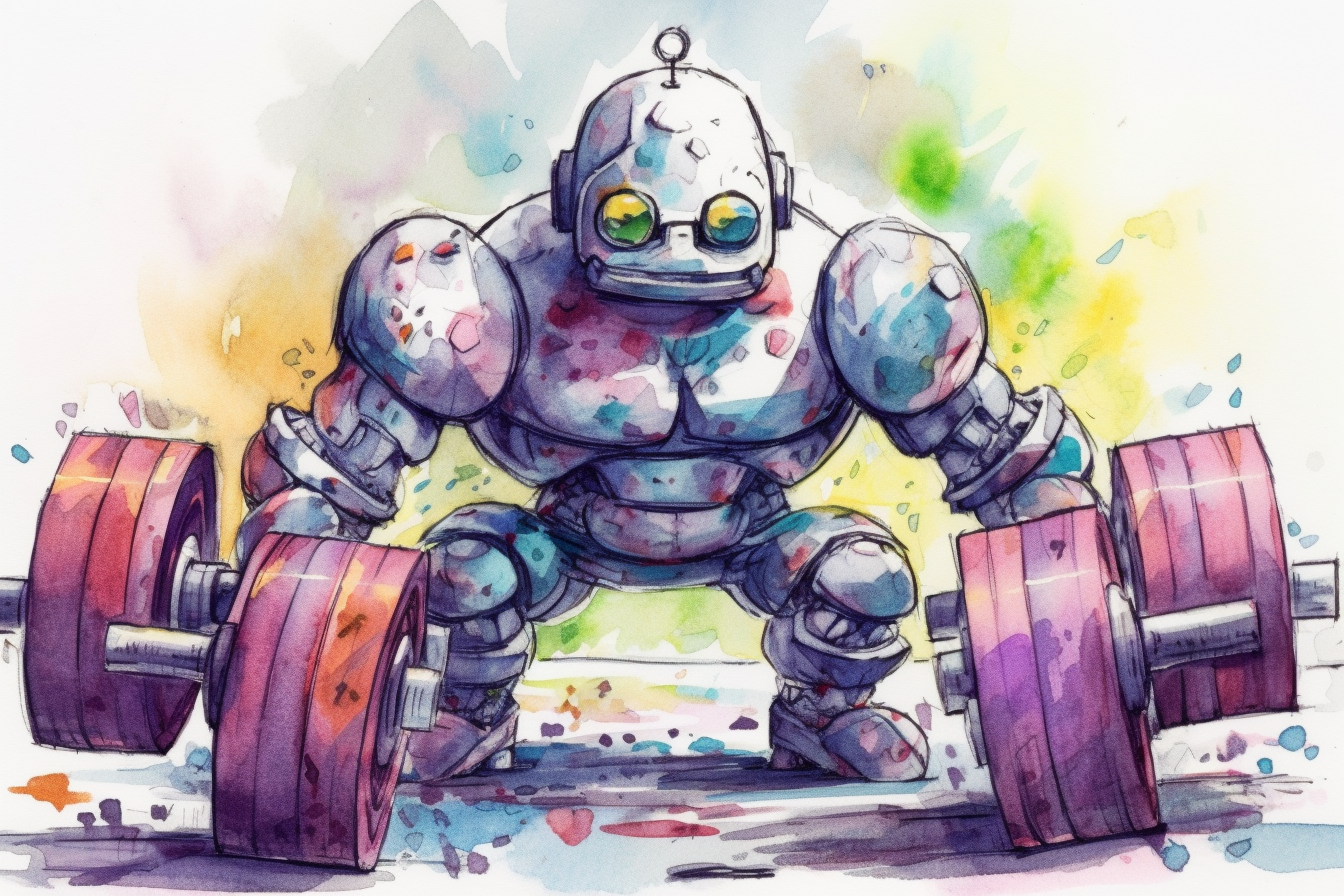

ChatGPT is based on GPT-3.5 and then fine-tuned twice — first using supervised learning and then using reinforcement learning.

In the first step, supervised fine-tuning (SFT), human AI trainers provide conversations in which they play both sides — the user and an AI assistant. The trainers are given model-written suggestions to help them compose their responses. This new dataset is used to fine-tune ChatGPT.

In the second step, ChatGPT is fine-tuned using a technique called reinforcement learning from human feedback (RLHF).

Reinforcement learning is a type of machine learning where an agent (in this case, ChatGPT) learns to behave in an environment by trial and error. The agent receives rewards for actions that lead to desired outcomes and punishments for actions that lead to undesired outcomes. Over time, the agent learns to take actions that maximize its rewards.
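The trial-and-error idea can be sketched with a very small example, often called a bandit problem: an agent repeatedly picks one of two actions, sees a reward or punishment, and keeps a running average of how well each action has done. This is a heavily simplified illustration with made-up actions and rewards. The reinforcement learning used to train ChatGPT is far more sophisticated.

```python
import random

def run_bandit(reward_for_action, steps, exploration_rate, seed):
    """Learn by trial and error which action earns the highest reward."""
    rng = random.Random(seed)
    actions = list(reward_for_action)
    estimates = {action: 0.0 for action in actions}
    counts = {action: 0 for action in actions}
    for _ in range(steps):
        if rng.random() < exploration_rate:
            action = rng.choice(actions)              # explore: try something at random
        else:
            action = max(actions, key=estimates.get)  # exploit: use the best-known action
        reward = reward_for_action[action]
        counts[action] += 1
        # Keep a running average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

# Hypothetical environment: one action is punished, the other rewarded.
rewards = {"rude reply": -1.0, "helpful reply": 1.0}
estimates, counts = run_bandit(rewards, steps=200, exploration_rate=0.1, seed=0)
best = max(estimates, key=estimates.get)
print("learned best action:", best)
print("times each action was tried:", counts)
```

The agent starts out knowing nothing, gets punished for the rude reply, and quickly settles on the helpful one while still exploring occasionally.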

AI trainers have conversations with ChatGPT, using the same prompt several times to produce alternative completions. The AI trainers rank these completions, and the rankings are used to train a reward model. In reinforcement learning, a reward model is a way of giving feedback to the agent about how well it’s doing. It takes in an action and tells how good or bad it is. The goal of the agent is to learn how to maximize the sum of rewards over time.
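One way to picture how rankings become training data is to break each ranking into pairs of "this completion was preferred over that one". This is a common way to prepare data for a reward model, but I am assuming it here for illustration and not describing ChatGPT's actual pipeline. The completions are made up, and text length is only a stand-in for a real reward model.

```python
from itertools import combinations

def ranking_to_preference_pairs(ranked_completions):
    """Turn one best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_completions, 2))

def agreement_rate(reward_function, pairs):
    """Fraction of pairs where the reward function scores the preferred text higher."""
    agreed = sum(
        1 for preferred, rejected in pairs
        if reward_function(preferred) > reward_function(rejected)
    )
    return agreed / len(pairs)

# Hypothetical completions for one prompt, ranked best to worst by a trainer.
ranked = [
    "Sure, here is a step-by-step answer.",
    "Here is an answer.",
    "No.",
]
pairs = ranking_to_preference_pairs(ranked)
for preferred, rejected in pairs:
    print(f"{preferred!r} beats {rejected!r}")

# Stand-in reward function: longer text scores higher. A real reward model
# is a trained neural network, not a rule like this.
print(agreement_rate(len, pairs))
```

A reward model is considered good when its scores agree with the human rankings on most pairs, which is what the agreement rate measures in this toy version.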

Finally, ChatGPT is fine-tuned to generate outputs that get high ratings according to this reward model.

Using ChatGPT

You could think it’s rather pointless to discuss using ChatGPT because it’s a chatbot, and you just chat with it. That is not entirely wrong, but it might not be the best way to use ChatGPT. If you’re not careful, you could get false and misleading information.

Hallucination

Occasionally, LLMs produce wrong or nonsensical results and present them confidently. This is known as hallucination.

LLMs hallucinate for a few reasons. First, these models are trained on massive amounts of text data from the Internet, which contains both accurate and inaccurate information. While they learn a lot from this data, they can sometimes generate factually incorrect responses or make things up.

Second, LLMs don’t have a real understanding or common sense as humans do. They operate based on patterns in the data they were trained on. So, if they encounter a question or topic they haven’t learned about, they might try to generate a response that sounds plausible but is actually made up.

Additionally, LLMs can be sensitive to slight changes in input phrasing, leading to variations in their responses. Sometimes, these variations can result in inconsistencies or nonsensical answers.

There are a few known ways to reduce hallucinations in LLMs.

One of the significant ways to reduce hallucination in LLMs is to improve the training data. More diverse and higher-quality data can lead to more accurate and less hallucinatory responses.

Detailed human supervision, especially during fine-tuning, can also help mitigate hallucinations. Humans can provide real-time feedback, correct inaccuracies, and enhance the model’s ability to provide reliable responses.

Some architectural changes can potentially mitigate hallucinations, such as creating models that are better at maintaining a coherent narrative over long stretches of text.

A well-designed prompt can guide the LLM into providing accurate, on-topic, and non-hallucinatory responses. So understanding how prompt design affects AI responses is crucial.

Prompting

A prompt is a short piece of text that is used to guide an LLM in generating a response. The idea is to give the model context or direction for what kind of text it should generate. Without a prompt, a language model wouldn’t know where to start.

A well-designed prompt can guide the LLM to generate good and accurate responses and also reduce or eliminate hallucinations. Here are some tips for writing good prompts:

- It’s obvious, but sometimes we forget to write simply and give clear instructions. Ambiguous prompts can result in interesting responses, but they might also give wrong responses.

- Use delimiters to indicate different parts of the prompt clearly. Prompts often contain both instructions and data. If you separate them clearly, the LLM is less likely to mix them up and hallucinate.

Summarize the text delimited by double square brackets into a single short sentence of not more than 10 words.

[[ It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity, it was the season of Light, it was the season of Darkness, it was the spring of hope, it was the winter of despair, we had everything before us, we had nothing before us, we were all going direct to Heaven, we were all going direct the other way--in short, the period was so far like the present period, that some of its noisiest authorities insisted on its being received, for good or for evil, in the superlative degree of comparison only.]]

The period was paradoxically marked by extreme contrasts and similarities.

- Ask the LLM to check for conditions before providing the appropriate response. Checking for conditions can stop the LLM from making things up when it doesn’t know the answer.

Summarize the text delimited by double square brackets into a single short sentence of not more than 10 words. If it's already a single sentence that is less than 10 words, just say, "It's already summarized."

[[The period was paradoxically marked by extreme contrasts and similarities.]]

It's already summarized.

- Give examples of the responses you want before making the request. You can guide the LLM to respond the way you want by giving it examples.

Give me key information about a city in a single short sentence.

For example:

Me: "Tell me about Paris."

You: "It is a city of romance and history."

Me: "Tell me about London."

You: "It's a city of cultural diversity and landmark buildings."

Me: "Tell me about Singapore."

You:

"Singapore is a vibrant city-state renowned for its cleanliness and multiculturalism."

- Provide steps to complete the task. If you know the exact steps you want the LLM to do to get the final answer, it helps if you can provide those steps to guide it along the way.

- Iterate and refine the prompt. Prompt writing is often iterative. You will write a simple one, then refine it over and over again, adding details and clarity until you achieve the kind of response you want.
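If you build prompts in a program instead of typing them by hand, the tips above can be turned into small helper functions. The sketch below only assembles the prompt text. It does not call any LLM, and the function names are my own invention for this example.

```python
def build_summary_prompt(text, max_words=10):
    """Combine clear instructions, a condition check, and delimited data."""
    return (
        "Summarize the text delimited by double square brackets into a single "
        f"short sentence of not more than {max_words} words. "
        f"If it's already a single sentence that is less than {max_words} words, "
        'just say, "It\'s already summarized."\n\n'
        f"[[{text}]]"
    )

def build_few_shot_prompt(instruction, examples, question):
    """Show the LLM a few example exchanges before the real request."""
    lines = [instruction, "", "For example:"]
    for asked, answered in examples:
        lines.append(f'Me: "{asked}"')
        lines.append(f'You: "{answered}"')
    lines.append(f'Me: "{question}"')
    lines.append("You:")
    return "\n".join(lines)

summary_prompt = build_summary_prompt("It was the best of times, it was the worst of times.")
print(summary_prompt)
print()

few_shot_prompt = build_few_shot_prompt(
    "Give me key information about a city in a single short sentence.",
    [
        ("Tell me about Paris.", "It is a city of romance and history."),
        ("Tell me about London.", "It's a city of cultural diversity and landmark buildings."),
    ],
    "Tell me about Singapore.",
)
print(few_shot_prompt)
```

Keeping the instructions in one place like this also makes iterating easier: you refine the wording once and every prompt built from it picks up the change.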

Wrap up

ChatGPT and other LLM chatbots like Bard and Claude are fascinating. Looking under the hood to understand the basics of how they work can give us better insights into making them work better for us.

Happy chatting!